1、准备工作

(1)主机名映射S

# vi /etc/hosts vi /etc/hosts

#127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

#::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.186.100 hadoop100注意:宿主的WIndows系统也要一样配置hadoop100并且映射到虚拟机的ip上

(2)SSH免密登录

Centos7默认安装了sshd服务,可使用ssh协议远程开启对其他主机shell的服务。

如果没有可以使用yum安装

sudo yum -y install openssl-devel

由于Hadoop集群的机器之间ssh通信默认需要输入密码,在集群运行时我们不可能为每一次通信都手动输入密码,因此需要配置机器之间的ssh的免密登录。

即使是单机伪分布式的Hadoop环境也不例外,一样需要配置本地对本地ssh连接的免密。

hadoop@hadoop100 ~]$ ssh-keygen -t rsa

Generating public/private rsa key pair.

Enter file in which to save the key (/home/hadoop/.ssh/id_rsa):

Created directory '/home/hadoop/.ssh'.

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /home/hadoop/.ssh/id_rsa.

Your public key has been saved in /home/hadoop/.ssh/id_rsa.pub.

The key fingerprint is:

SHA256:yQYChs4eniVLeICaI2bCB9HopbXUBE9v0lpLjBACUnM hadoop@hadoop100

The key's randomart image is:

+---[RSA 2048]----+

|==O+Eo |

|=+.O+.= |

|Bo* o+.B |

|B%.+ .*o.. |

|OoB . .S |

| = . |

| |

| |

| |

+----[SHA256]-----+

[hadoop@hadoop100 ~]$ cd ~/.ssh/

[hadoop@hadoop100 .ssh]$ cat id_rsa.pub >> authorized_keys

[hadoop@hadoop100 .ssh]$ chmod 600 authorized_keys

[hadoop@hadoop100 .ssh]$ ssh hadoop@hadoop100

The authenticity of host 'hadoop100 (192.168.186.100)' can't be established.

ECDSA key fingerprint is SHA256:aGLhdt3bIuqtPgrFWnhgrfTKUbDh4CWVTfIgr5E5oV0.

ECDSA key fingerprint is MD5:b8:bd:b3:65:fe:77:2c:06:2d:ec:58:3a:97:51:dd:ca.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added 'hadoop100,192.168.186.100' (ECDSA) to the list of known hosts.

Last login: Sat Jan 9 10:16:53 2021 from 192.168.186.1

[hadoop@hadoop100 ~]$ exit

登出

Connection to hadoop100 closed.

[hadoop@hadoop100 .ssh]$大致流程:

ssh-keygen命令生成RSA加密的密钥对(公钥和私钥)- 将公钥添加到~/.ssh目录下的authorized_keys文件中

- 如机器A需要SSH连接到机器B,就需要将机器A生成的公钥发送到机器B的authorized_keys文件中进行认证

- 如果需要和自己通信,就把自己生成的公钥放在自己的authorized_keys文件中即可

- 使用ssh命令连接本地终端,如果不需要输入密码则本地的免密配置成功

(3)配置时间同步

集群中的通信和文件传输一般是以系统时间作为约定条件的。所以当集群中机器之间系统如果不一致可能导致各种问题发生,比如访问时间过长,甚至失败。所以配置机器之间的时间同步非常重要。

另外,单机伪分布式可以无需配置时间同步服务器,只需要定时使用nptdate命令校准即可。

以下操作必须切换成root用户

安装ntp

[hadoop@hadoop100 .ssh]$ su root

密码:

[root@hadoop100 .ssh]# rpm -qa | grep ntp

[root@hadoop100 .ssh]# yum install -y ntp

- rpm -qa查询是否已经安装了ntp

- 如果没有则安装ntp

修改/etc/sysconfig/ntpd,增加如下内容(让硬件时间和系统时间一起同步)

SYNC_HWCLOCK=yes(4)定时校正时间

使用ntpdate命令手动的校正时间。

[root@hadoop100 .ssh]# ntpdate cn.pool.ntp.org

9 Jan 11:49:13 ntpdate[11274]: adjust time server 185.209.85.222 offset -0.016417 sec

[root@hadoop100 .ssh]# date

2021年 01月 09日 星期六 11:49:32 CST观察时间是否与你所在的时区一致,如果不一致请查看【常见问题】寻找解决办法。

接下来使用crontab定时任务来每10分钟校准一次

# 编辑新的定时任务

[root@hadoop100 .ssh]# crontab -e

*/10 * * * * /usr/sbin/ntpdate cn.pool.ntp.org

# 查看root账户下所有定时任务

[root@hadoop100 .ssh]# crontab -l

*/10 * * * * /usr/sbin/ntpdate cn.pool.ntp.org

为了测试,先修改时间为错误时间

[root@hadoop100 .ssh]# date -s '2020-1-1 01:01'

2020年 01月 01日 星期三 01:01:00 CST 10分钟后再次查看

[root@hadoop100 .ssh]# date

2021年 01月 09日 星期六 14:12:12 CST说明自动校准已经设置

默认crontab定时任务就是开机自动启动的

[root@hadoop100 .ssh]# systemctl list-unit-files | grep crond

crond.service enabled (5)统一目录结构

接下来切换用户为hadoop账户

[hadoop@hadoop100 opt]$ sudo mkdir /opt/download

[hadoop@hadoop100 opt]$ sudo mkdir /opt/data

[hadoop@hadoop100 opt]$ sudo mkdir /opt/bin

[hadoop@hadoop100 opt]$ sudo mkdir /opt/tmp

[hadoop@hadoop100 opt]$ sudo mkdir /opt/pkg

# 更改opt下目录的用户及其所在用户组为hadoop

[hadoop@hadoop100 opt]$ sudo chown hadoop:hadoop /opt/*

[hadoop@hadoop100 opt]$ ls

bin data download pkg tmp

[hadoop@hadoop100 opt]$ ll

总用量 0

drwxr-xr-x. 2 hadoop hadoop 6 1月 9 14:29 bin

drwxr-xr-x. 2 hadoop hadoop 6 1月 9 14:29 data

drwxr-xr-x. 2 hadoop hadoop 6 1月 9 14:29 download

drwxr-xr-x. 2 hadoop hadoop 6 1月 9 14:37 pkg

drwxr-xr-x. 2 hadoop hadoop 6 1月 9 14:36 tmp

目录规划如下:

/opt/

├── bin # shell脚本

├── data # 程序需要使用的数据

├── download # 下载的软件安装包

├── pkg # 解压方式安装的软件

└── tmp # 存放程序生成的临时文件

2、安装jdk

检查是否已经安装过JDK

[hadoop@hadoop100 download]$ rpm -qa | grep java

# 或者

[hadoop@hadoop100 download]$ yum list installed | grep java如果没有jdk或者jdk版本低于1.8,则重新安装jdk1.8

下载安装包到/opt/download/然后解压到/opt/pkg

[hadoop@hadoop100 download]$ tar -zxvf jdk-8u261-linux-x64.tar.gz

[hadoop@hadoop100 download]$ mv jdk1.8.0_261 /opt/pkg/java配置java环境变量

确认jdk的解压路径

[hadoop@hadoop100 java]$ pwd

/opt/pkg/java编辑/etc/profile.d/env.sh配置文件(没有则创建)

[hadoop@hadoop100 java]$ sudo vim /etc/profile.d/env.sh在后面添加新的环境变量配置

# JAVA_HOME

export JAVA_HOME=/opt/pkg/java

export PATH=$JAVA_HOME/bin:$PATH

使新的环境变量立刻生效

[hadoop@hadoop100 java]$ source /etc/profile.d/env.sh验证环境变量

[hadoop@hadoop100 java]$ java -version

[hadoop@hadoop100 java]$ java

[hadoop@hadoop100 java]$ javac3、安装Hadoop

-

解压

[hadoop@hadoop100 hadoop]$ tar -zxvf hadoop.tar.gz -C /opt/pkg/ -

编辑

/etc/profile.d/env.sh配置文件,添加环境变量# JAVA_HOME export JAVA_HOME=/opt/pkg/java export PATH=$JAVA_HOME/bin:$PATH # HADOOP_HOME export HADOOP_HOME=/opt/pkg/hadoop export PATH=$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$PATH -

使新的环境变量立刻生效

[hadoop@hadoop100 opt]$ source /etc/profile.d/env.sh -

验证

[hadoop@hadoop100 opt]$ hadoop version -

修改相关命令执行环境

hadoop/etc/hadoop/hadoop-env.sh - hadoop命令执行环境

# The java implementation to use. export JAVA_HOME=/opt/pkg/java- 修改JAVA_HOME为真实JDK路径即可

-

hadoop/etc/hadoop/yarn.env.sh - yarn命令执行环境

# export JAVA_HOME=/home/y/libexec/jdk1.6.0/ export JAVA_HOME=/opt/pkg/java- 添加JAVA_HOME为真实JDK路径即可

-

hadoop/etc/hadoop/mapred.env.sh - map reducer命令执行环境

# export JAVA_HOME=/home/y/libexec/jdk1.6.0/ export JAVA_HOME=/opt/pkg/java- 添加JAVA_HOME为真实JDK路径即可

-

修改配置-实现伪分布式环境,来到 hadoop/etc/hadoop/,修改以下配置文件

hadoop/etc/hadoop/core-site.xml - 核心配置文件

fs.defaultFS hdfs://hadoop100:8020 hadoop.tmp.dir /opt/pkg/hadoop/data/tmp io.file.buffer.size 4096 fs.trash.interval 10080 -

主机名修改成本机的主机名

-

hadoop.tmp.dir十分重要,保存这个hadoop集群中namenode和datanode的所有数据

-

-

hadoop/etc/hadoop/hdfs-site.xml - HDFS相关配置

dfs.replication 1 dfs.namenode.secondary.http-address hadoop100:9868 dfs.namenode.http-address hadoop100:9870 dfs.permissions false -

dfs.replication默认是3,为了节省虚拟机资源,这里设置为1

-

全分布式情况下,SecondaryNameNode和NameNode 应分开部署

-

dfs.namenode.secondary.http-address默认就是本地,如果是伪分布式可以不用配置

-

-

hadoop/etc/hadoop/mapred-site.xml - mapreduce 相关配置

mapreduce.framework.name yarn mapreduce.jobhistory.address hadop100:10020 mapreduce.jobhistory.webapp.address hadoop100:19888 yarn.app.mapreduce.am.env HADOOP_MAPRED_HOME=/opt/pkg/hadoop mapreduce.map.env HADOOP_MAPRED_HOME=/opt/pkg/hadoop mapreduce.reduce.env HADOOP_MAPRED_HOME=/opt/pkg/hadoop

-

mapreduce.jobhistory相关配置是可选配置,用于查看MR任务的历史日志

- 这里主机名千万不要弄错,不然任务执行会失败,且不容易找原因

- 需要手动启动MapReduceJobHistory后台服务才能在Yarn的页面打开历史日志

- 这里主机名千万不要弄错,不然任务执行会失败,且不容易找原因

-

-

配置 yarn-site.xml

yarn.resourcemanager.hostname hadoop100 yarn.nodemanager.aux-services mapreduce_shuffle yarn.log-aggregation-enable true yarn.log-aggregation.retain-seconds 604800 yarn.nodemanager.vmem-check-enabled false yarn.nodemanager.pmem-check-enabled false -

workers DataNode 节点配置

vi workers [hadoop@hadoop100 hadoop]$ vi workers hadoop100- 如果是伪分布式可以不进行修改,默认是localhost, 也可以改成本机的主机名

-

全分布式配置则需要每行输入一个DataNode主机名

- 注意不要有空格和空行,因为其他脚本会获取相关主机名信息

4、格式化名称节点

格式化:HDFS(NameNode)

hadoop@hadoop100 hadoop]$ hdfs namenode -format

21/01/09 19:27:21 INFO namenode.NameNode: STARTUP_MSG:

/************************************************************

STARTUP_MSG: Starting NameNode

STARTUP_MSG: host = hadoop100/192.168.186.100

STARTUP_MSG: args = [-format]

STARTUP_MSG: version = 2.7.3

************************************************************/

21/01/09 19:27:21 INFO namenode.NameNode: registered UNIX signal handlers for [TERM, HUP, INT]

21/01/09 19:27:21 INFO namenode.NameNode: createNameNode [-format]

Formatting using clusterid: CID-08318e9e-e202-48f3-bcb1-548ca50310c9

21/01/09 19:27:22 INFO util.GSet: Computing capacity for map BlocksMap

21/01/09 19:27:22 INFO util.GSet: VM type = 64-bit

21/01/09 19:27:22 INFO util.GSet: 2.0% max memory 966.7 MB = 19.3 MB

21/01/09 19:27:22 INFO util.GSet: capacity = 2^21 = 2097152 entries

21/01/09 19:27:22 INFO blockmanagement.BlockManager: dfs.block.access.token.enable=false

21/01/09 19:27:22 INFO blockmanagement.BlockManager: defaultReplication = 1

21/01/09 19:27:22 INFO blockmanagement.BlockManager: maxReplication = 512

21/01/09 19:27:22 INFO blockmanagement.BlockManager: minReplication = 1

21/01/09 19:27:22 INFO blockmanagement.BlockManager: maxReplicationStreams = 2

21/01/09 19:27:22 INFO blockmanagement.BlockManager: replicationRecheckInterval = 3000

21/01/09 19:27:22 INFO blockmanagement.BlockManager: encryptDataTransfer = false

21/01/09 19:27:22 INFO blockmanagement.BlockManager: maxNumBlocksToLog = 1000

21/01/09 19:27:22 INFO namenode.FSNamesystem: fsOwner = hadoop (auth:SIMPLE)

21/01/09 19:27:22 INFO namenode.FSNamesystem: supergroup = supergroup

21/01/09 19:27:22 INFO namenode.FSNamesystem: isPermissionEnabled = false

21/01/09 19:27:22 INFO namenode.FSNamesystem: HA Enabled: false

21/01/09 19:27:22 INFO namenode.FSNamesystem: Append Enabled: true

21/01/09 19:27:23 INFO common.Storage: Storage directory /opt/pkg/hadoop/data/tmp/dfs/name has been successfully formatted.

/************************************************************

SHUTDOWN_MSG: Shutting down NameNode at hadoop100/192.168.186.100

************************************************************/-

注意格式化后会在hdfs-site.xml中指定的hadoop.tmp.dir目录中生成相关数据

-

其中NameNode和DataNode的数据文件夹中应保存着一致的ClusterID(CID)

[hadoop@hadoop100 hadoop]$ cat /opt/pkg/hadoop/data/tmp/dfs/name/current/VERSION #Sat Jan 09 19:27:23 CST 2021 namespaceID=637773384 clusterID=CID-08318e9e-e202-48f3-bcb1-548ca50310c9 cTime=0 storageType=NAME_NODE blockpoolID=BP-1926974917-192.168.186.100-1610191643341 layoutVersion=-63 [hadoop@hadoop100 hadoop]$ cat /opt/pkg/hadoop/data/tmp/dfs/data/current/VERSION #Sat Jan 09 19:33:49 CST 2021 storageID=DS-6abf02d0-274c-4b7a-9d1d-05ed7d73636a clusterID=CID-08318e9e-e202-48f3-bcb1-548ca50310c9 cTime=0 datanodeUuid=44ff2304-01b1-4d8a-8a42-a2ad6e62ebba storageType=DATA_NODE layoutVersion=-56- 如果多次格式化就会导致NamdeNode新生成的CID和DataNode不一致

- 解决办法,停止集群,将DN的CID修改成和NN的CID一致,再启动集群

5、运行和测试

启动Hadoop环境,刚启动Hadoop的HDFS系统后会有几秒的安全模式,安全模式期间无法进行任何数据处理,这也是为什么不建议使用start-all.sh脚本一次性启动DFS进程和Yarn进程,而是先启动dfs后过30秒左右再启动Yarn相关进程。

启动DFS进程:

[hadoop@hadoop100 hadoop]$ start-dfs.sh

Starting namenodes on [hadoop100]

hadoop100: starting namenode, logging to /opt/pkg/hadoop/logs/hadoop-hadoop-namenode-hadoop100.out

hadoop100: starting datanode, logging to /opt/pkg/hadoop/logs/hadoop-hadoop-datanode-hadoop100.out

Starting secondary namenodes [hadoop100]

hadoop100: starting secondarynamenode, logging to /opt/pkg/hadoop/logs/hadoop-hadoop-secondarynamenode-hadoop100.out

启动YARN进程:

[hadoop@hadoop100 hadoop]$ start-yarn.sh

starting yarn daemons

starting resourcemanager, logging to /opt/pkg/hadoop/logs/yarn-hadoop-resourcemanager-hadoop100.out

hadoop100: starting nodemanager, logging to /opt/pkg/hadoop/logs/yarn-hadoop-nodemanager-hadoop100.out启动MapReduceJobHistory后台服务 - 用于查看MR执行的历史日志

[hadoop@hadoop100 mapreduce]$ mr-jobhistory-daemon.sh start historyserver停止集群(可以做成脚本)

stop-dfs.sh

stop-yarn.sh

# 已过时 mr-jobhistory-daemon.sh stop historyserver

mapred --daemon stop historyserver单个进程逐个启动

# 在主节点上使用以下命令启动 HDFS NameNode: # 已过时 hadoop-daemon.sh start namenode hdfs --daemon start namenode # 在主节点上使用以下命令启动 HDFS SecondaryNamenode: # 已过时 hadoop-daemon.sh start secondarynamenode hdfs --daemon start secondarynamenode # 在每个从节点上使用以下命令启动 HDFS DataNode: # 已过时 hadoop-daemon.sh start datanode hdfs --daemon start datanode # 在主节点上使用以下命令启动 YARN ResourceManager: # 已过时 yarn-daemon.sh start resourcemanager yarn --daemon start resourcemanager # 在每个从节点上使用以下命令启动 YARN nodemanager: # 已过时 yarn-daemon.sh start nodemanager yarn --daemon start nodemanager以上脚本位于

$HADOOP_HOME/sbin/目录下。如果想要停止某个节点上某个角色,只需要把命令中的start 改为stop 即可。

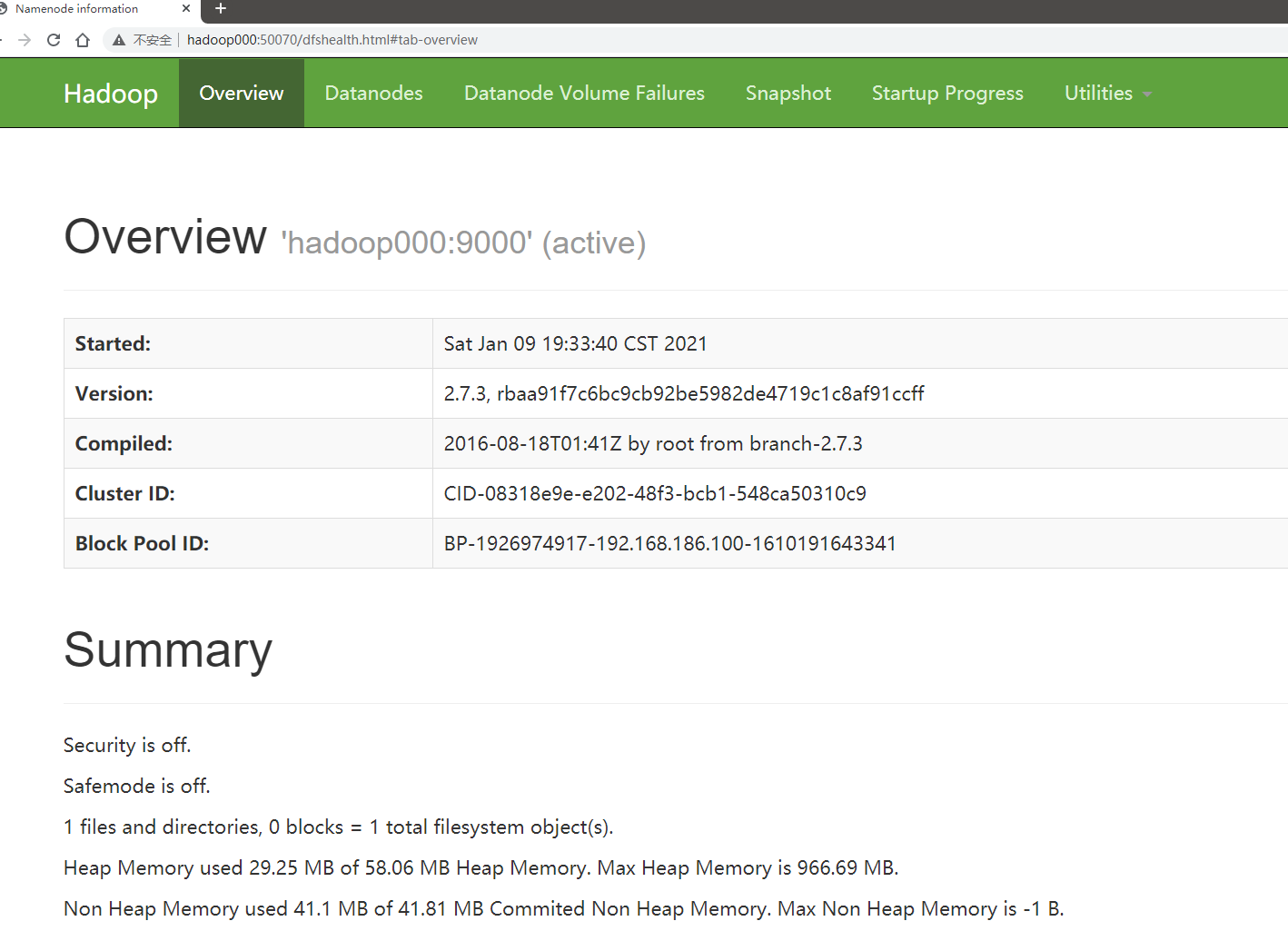

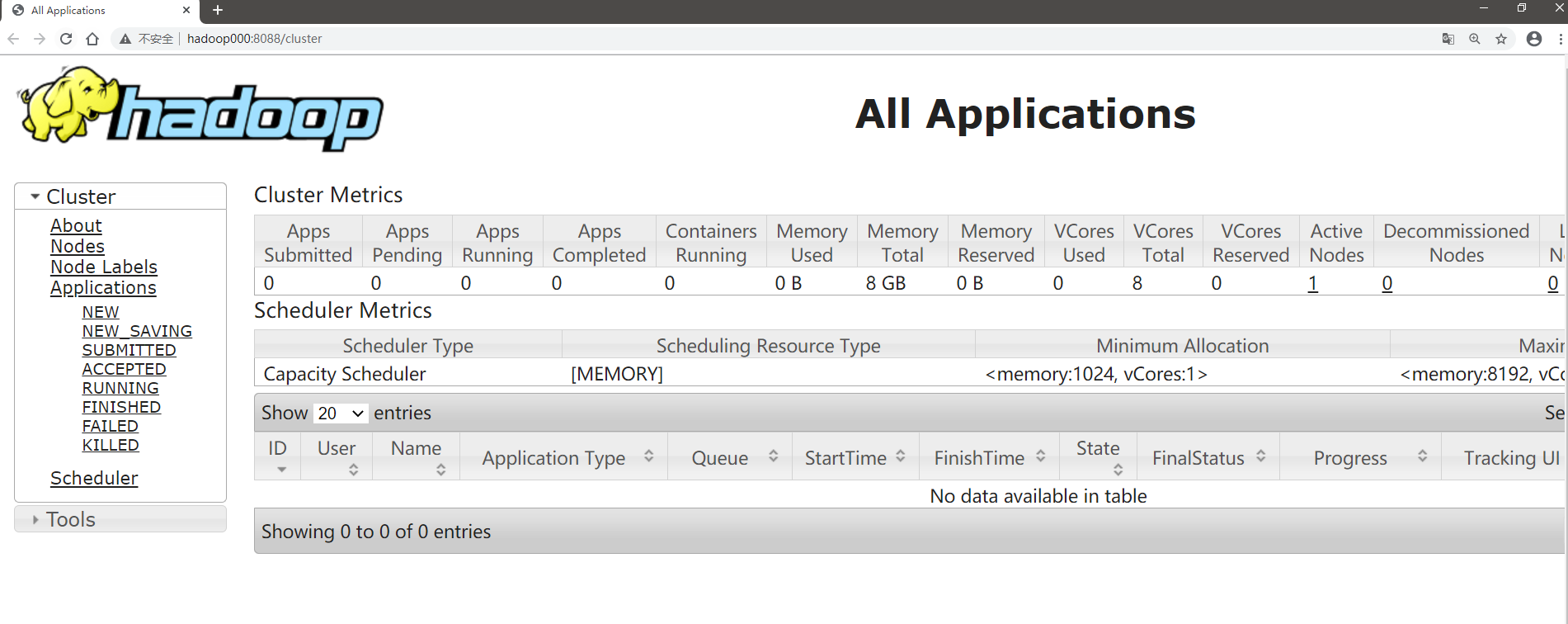

Web界面进行验证

执行jps命令,看看是否会有如下进程:

[hadoop@hadoop100 hadoop]$ jps

14608 NodeManager

14361 SecondaryNameNode

14203 DataNode

14510 ResourceManager

14079 NameNode

14910 Jps使用官方自带的示例程序测试

[hadoop@hadoop100 mapreduce]$ cd /opt/pkg/hadoop/share/hadoop/mapreduce

[hadoop@hadoop100 mapreduce]$ hadoop jar hadoop-mapreduce-examples-2.7.3.jar wordcount

Usage: wordcount [...]

准备输入文件,并上传到HDFS系统

[hadoop@hadoop100 input]$ cat /opt/data/mapred/input/wc.txt

hadoop hadoop hadoop

hi hi hi hello hadoop

hello world hadoop

[hadoop@hadoop100 input]$ hadoop fs -mkdir -p /input/wc

[hadoop@hadoop100 input]$ hadoop fs -put wc.txt /input/wc/

Found 1 items

-rw-r--r-- 1 hadoop supergroup 62 2021-01-09 20:15 /input/wc/wc.txt

[hadoop@hadoop100 input]$ hadoop fs -cat /input/wc/wc.txt

hadoop hadoop hadoop

hi hi hi hello hadoop

hello world hadoop

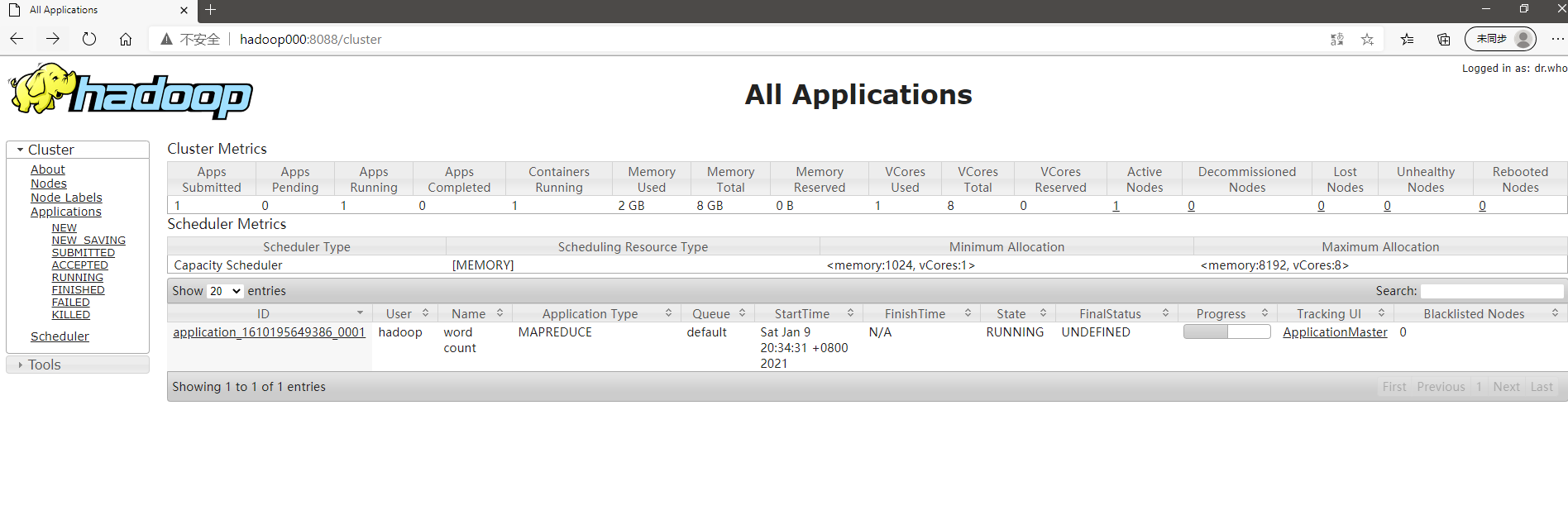

运行示例wordcount程序,并将结果输出到/output/wc之中

[hadoop@hadoop100 mapreduce]$ cd /opt/pkg/hadoop/share/hadoop/mapreduce

[hadoop@hadoop100 mapreduce]$ hadoop jar hadoop-mapreduce-examples-2.7.3.jar wordcount /input/wc/ /output/wc/

21/01/09 20:23:27 INFO client.RMProxy: Connecting to ResourceManager at hadoop100/192.168.186.100:8032

21/01/09 20:23:28 INFO input.FileInputFormat: Total input paths to process : 1

21/01/09 20:23:28 INFO mapreduce.JobSubmitter: number of splits:1

21/01/09 20:23:29 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1610194940581_0001

21/01/09 20:23:29 INFO impl.YarnClientImpl: Submitted application application_1610194940581_0001

21/01/09 20:23:29 INFO mapreduce.Job: The url to track the job: http://hadoop100:8088/proxy/application_1610194940581_0001/

21/01/09 20:23:29 INFO mapreduce.Job: Running job: job_1610194940581_0001

21/01/09 20:23:43 INFO mapreduce.Job: Job job_1610194940581_0001 running in uber mode : false

21/01/09 20:23:43 INFO mapreduce.Job: map 0% reduce 0%

21/01/09 20:23:52 INFO mapreduce.Job: map 100% reduce 0%

21/01/09 20:24:00 INFO mapreduce.Job: map 100% reduce 100%

21/01/09 20:24:01 INFO mapreduce.Job: Job job_1610194940581_0001 completed successfully

21/01/09 20:24:02 INFO mapreduce.Job: Counters: 49

File System Counters

FILE: Number of bytes read=52

FILE: Number of bytes written=237407

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

HDFS: Number of bytes read=164

HDFS: Number of bytes written=30

HDFS: Number of read operations=6

HDFS: Number of large read operations=0

HDFS: Number of write operations=2

Job Counters

Launched map tasks=1

Launched reduce tasks=1

Data-local map tasks=1

Total time spent by all maps in occupied slots (ms)=7041

Total time spent by all reduces in occupied slots (ms)=5566

Total time spent by all map tasks (ms)=7041

Total time spent by all reduce tasks (ms)=5566

Total vcore-milliseconds taken by all map tasks=7041

Total vcore-milliseconds taken by all reduce tasks=5566

Total megabyte-milliseconds taken by all map tasks=7209984

Total megabyte-milliseconds taken by all reduce tasks=5699584

Map-Reduce Framework

Map input records=3

Map output records=11

Map output bytes=106

Map output materialized bytes=52

Input split bytes=102

Combine input records=11

Combine output records=4

Reduce input groups=4

Reduce shuffle bytes=52

Reduce input records=4

Reduce output records=4

Spilled Records=8

Shuffled Maps =1

Failed Shuffles=0

Merged Map outputs=1

GC time elapsed (ms)=146

CPU time spent (ms)=2570

Physical memory (bytes) snapshot=339308544

Virtual memory (bytes) snapshot=4163043328

Total committed heap usage (bytes)=219676672

Shuffle Errors

BAD_ID=0

CONNECTION=0

IO_ERROR=0

WRONG_LENGTH=0

WRONG_MAP=0

WRONG_REDUCE=0

File Input Format Counters

Bytes Read=62

File Output Format Counters

Bytes Written=30- 注意,输入是文件夹,可以指定多个

- 输出是一个必须不能存在的文件夹路径

- 计算结果会写入到output指定的文件夹中

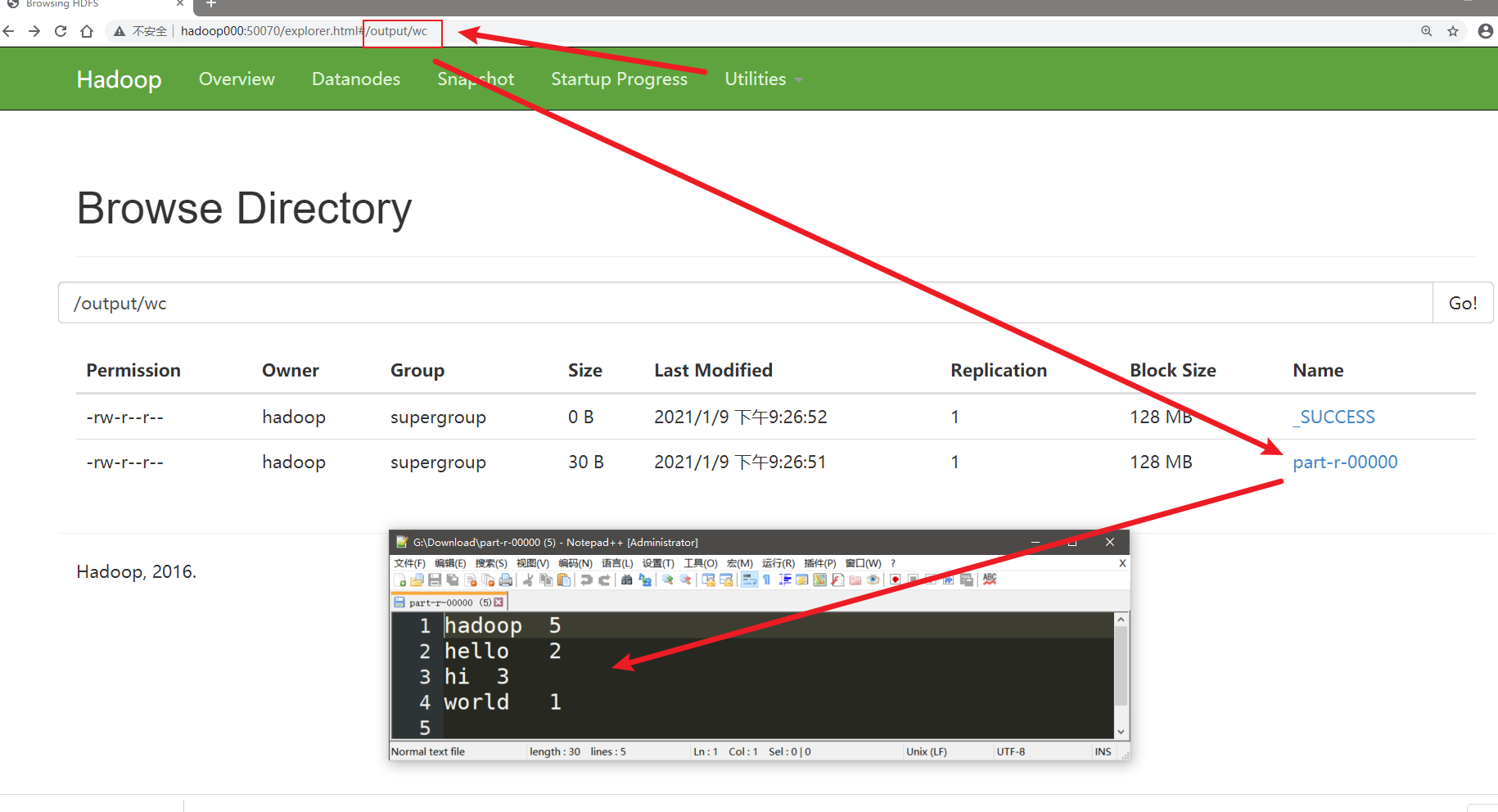

查看保存在HDFS上的结果

[hadoop@hadoop100 mapreduce]$ hadoop fs -ls /output/wc/

Found 2 items

-rw-r--r-- 1 hadoop supergroup 0 2021-01-09 20:23 /output/wc/_SUCCESS

-rw-r--r-- 1 hadoop supergroup 30 2021-01-09 20:23 /output/wc/part-r-00000

[hadoop@hadoop100 mapreduce]$ hadoop fs -cat /output/wc/part-r-00000

hadoop 5

hello 2

hi 3

world 1

在MR任务执行时,可以通过Yarn的界面查看进度

执行完毕以后点击TrackingUI下的History可以查看历史日志记录

如果跳转页面报404说明没有启动JobHistoryServer服务

[hadoop@hadoop100 mapreduce]$ mr-jobhistory-daemon.sh start historyserver

也可以在HDFS的Web界面上查看结果

Utilities -> HDFS browser -> /output/wc/ -> click part-r-00000 -> download part-r-00000

[hadoop@hadoop100 hadoop]$ stop-all.sh

This script is Deprecated. Instead use stop-dfs.sh and stop-yarn.sh

Stopping namenodes on [hadoop100]

hadoop100: stopping namenode

hadoop100: stopping datanode

Stopping secondary namenodes [hadoop100]

hadoop100: stopping secondarynamenode

stopping yarn daemons

stopping resourcemanager

hadoop100: stopping nodemanager

no proxyserver to stop

6、常见问题:

(1)时间校准后仍然偏差几个小时

使用nptdate 进行时间校准后发现校准后的时间仍然偏移很多,这是因为时区的设置问题(如果是纽约时间, 则相差13个小时),修改时区的方法就是删除原来的软链。软链的位置:

ll /etc/localtime

/etc/localtime -> ../usr/share/zoneinfo/America/New_York

rm /etc/localtime删除后时间就变成了UTC时间 - 格林威治时间,与中国时间相差8个小时

接下来只需要重新生成一个指向中国时区的软链,就可以正常显示中国时区的时间了

$ sudo ln -s /usr/share/zoneinfo/Asia/Shanghai /etc/localtime这样时区就没问题了

(2)没有DataNode

如果多次格式化会导致NamdeNode新生成的CID和DataNode不一致

-

解决办法,

- 停止集群,将DN的CID修改成和NN的CID一致,格式化NN,再启动集群

- 建议

- 停止集群,删除hadoop.tmp.dir下的所有内容,格式化NN,再启动集群

- 不建议,会丢失数据,如果不怕丢失数据可以这样做

(3)MapReducer任务失败

有很多原因,主要是分析日志的信息

一般的原因是:

-

缺少服务进程

- 查找对应服务的日志

-

输出目录已经存在

- 删除已经存在的目录或者改为不存在的目录

-

Host无法解析

- 检查/etc/hosts的映射的主机名和ip是否准确

- 检查所有配置文件中的主机名和端口号是否正确

-

其他的错误

-

可以通过MR的历史日志进行定位

-

或者通过MR任务的Yarn日志查看任务执行细节

yarn logs -applicationId -

Views: 98