Installation

- Download

http://flume.apache.org/download.html

http://archive.apache.org/dist/flume/stable/

    wget http://archive.apache.org/dist/flume/stable/apache-flume-1.9.0-bin.tar.gz

This guide uses apache-flume-1.9.0, the latest release at the time of writing.

- Extract and install

    tar zxvf apache-flume-1.9.0-bin.tar.gz -C /opt/pkg/

- Rename the directory

    cd /opt/pkg
    mv apache-flume-1.9.0-bin/ flume

- Configure the environment variables and make them take effect

    # FLUME 1.9.0
    export FLUME_HOME=/opt/pkg/flume
    export PATH=$FLUME_HOME/bin:$PATH
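For the variables to take effect, the exports need to sit in a file the shell reads at startup, and that file has to be re-sourced in the current session. A minimal sketch, assuming `~/.bashrc` is that file; the helper name `add_flume_env` is hypothetical and only illustrates appending the lines once:

```shell
#!/usr/bin/env bash
# Hypothetical helper: append the Flume exports to a profile file exactly once.
add_flume_env() {
  local profile="$1"
  # Skip if the exports are already present, so re-running is harmless.
  if grep -q 'FLUME_HOME=' "$profile" 2>/dev/null; then
    return 0
  fi
  cat >> "$profile" <<'EOF'
# FLUME 1.9.0
export FLUME_HOME=/opt/pkg/flume
export PATH=$FLUME_HOME/bin:$PATH
EOF
}

# Usage (assumes ~/.bashrc is the profile in use):
#   add_flume_env "$HOME/.bashrc"
#   source "$HOME/.bashrc"    # apply the change to the current shell
#   echo "$FLUME_HOME"        # should print the install path
```

If the exports were placed in a different file (for example `/etc/profile`), source that file instead.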

- Edit conf/flume-env.sh to set the JDK path (the file does not exist by default, so copy it from the template first)

Copy:

    cp flume-env.sh.template flume-env.sh

Edit the file and set the following:

    # Set JAVA_HOME:
    export JAVA_HOME=/opt/pkg/java
    # Change the default memory settings:
    export JAVA_OPTS="-Xms1024m -Xmx1024m -Xss256k -Xmn2g -XX:+UseParNewGC -XX:+UseConcMarkSweepGC -XX:-UseGCOverheadLimit"

- Symlink the jars Flume depends on from the hadoop-3.1.4 installation into flume-1.9.0/lib:

    cd /opt/pkg/flume/lib
    ln -s /opt/pkg/hadoop/share/hadoop/common/hadoop-common-3.1.4.jar ./
    ln -s /opt/pkg/hadoop/share/hadoop/common/lib/commons-configuration2-2.1.1.jar ./
    ln -s /opt/pkg/hadoop/share/hadoop/common/lib/hadoop-auth-3.1.4.jar ./
    ln -s /opt/pkg/hadoop/share/hadoop/common/lib/htrace-core4-4.1.0-incubating.jar ./
    ln -s /opt/pkg/hadoop/share/hadoop/common/lib/commons-io-2.5.jar ./
    ln -s /opt/pkg/hadoop/share/hadoop/hdfs/hadoop-hdfs-3.1.4.jar ./
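`ln -s` succeeds even when the source path is wrong, leaving a dangling link that only shows up later as a missing class. A small check along these lines can catch that early; the function name `find_broken_links` is hypothetical, and this is only a sketch:

```shell
#!/usr/bin/env bash
# Hypothetical helper: print every symlink in a directory whose target is missing.
find_broken_links() {
  local dir="$1" f
  for f in "$dir"/*; do
    # -L: the entry is a symlink; ! -e: the link target does not exist
    if [ -L "$f" ] && [ ! -e "$f" ]; then
      echo "$f"
    fi
  done
}

# Usage:
#   find_broken_links /opt/pkg/flume/lib
```

No output means every link in the directory resolves to an existing file.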

Testing

- Verify

    bin/flume-ng version
    flume 1.9.0
    Source code repository: https://git-wip-us.apache.org/repos/asf/flume.git
    Revision: d4fcab4f501d41597bc616921329a4339f73585e
    Compiled by fszabo on Mon Dec 17 20:45:25 CET 2018
    From source with checksum 35db629a3bda49d23e9b3690c80737f9

- Configure the Flume HDFS sink:

Create a new file named log2hdfs.conf in Flume's conf directory

Add the following content:

    [hadoop@hadoop100 conf]$ vi log2hdfs.conf

    # define the agent
    a1.sources=r1
    a1.channels=c1
    a1.sinks=k1

    # define the source
    # spooling-directory source: watches a directory for files to upload
    a1.sources.r1.type=spooldir
    a1.sources.r1.spoolDir=/tmp/flume-logs
    # suffix appended to a file once it has been fully ingested
    a1.sources.r1.fileSuffix=.FINISHED
    # maximum line length, sized to the text lines being read; 4096 = 4k
    a1.sources.r1.deserializer.maxLineLength=4096

    # define the sink
    a1.sinks.k1.type = hdfs
    # uploaded files are stored under /flume/logs on HDFS
    a1.sinks.k1.hdfs.path = hdfs://hadoop100:8020/flume/logs/%y-%m-%d/
    a1.sinks.k1.hdfs.filePrefix=access_log
    a1.sinks.k1.hdfs.fileSuffix=.log
    a1.sinks.k1.hdfs.batchSize=1000
    a1.sinks.k1.hdfs.fileType = DataStream
    a1.sinks.k1.hdfs.writeFormat= Text

    # roll rules: normally roll by the 128 MB block size and set the other roll settings to 0
    # for this demo, roll once every 500k
    a1.sinks.k1.hdfs.rollSize= 512000
    a1.sinks.k1.hdfs.rollCount=0
    a1.sinks.k1.hdfs.rollInterval=0

    # directory rounding rule: usually once per day, week, or month; set to 10 seconds for this demo
    a1.sinks.k1.hdfs.round=true
    a1.sinks.k1.hdfs.roundValue=10
    a1.sinks.k1.hdfs.roundUnit= second
    # whether to use the local timestamp
    a1.sinks.k1.hdfs.useLocalTimeStamp=true

    # define the channel
    a1.channels.c1.type = memory
    # maximum number of events held in the channel
    a1.channels.c1.capacity = 500000
    # buffer capacity per Flume transaction: 1000 events
    a1.channels.c1.transactionCapacity = 1000

    # wire the source, channel, and sink together
    a1.sources.r1.channels = c1
    a1.sinks.k1.channel = c1

Notes:

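- If the a1.sources.r1.spoolDir directory does not exist, create it first.

- The a1.sinks.k1.hdfs.path directory is created automatically.

- The path here is `hdfs://hadoop100:8020/flume/logs/yy-mm-dd/`, so each day's data is rolled into its own dated directory.

- In practice, rolled logs should be generated per day, per week, or per month.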
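The `%y-%m-%d` escapes in the HDFS path stand for two-digit year, month, and day, the same letters the `date` command uses. Today's target directory can therefore be previewed with a sketch like this, assuming it runs on the agent host, since the config takes the timestamp from the local clock:

```shell
#!/usr/bin/env bash
# Preview the HDFS directory implied by the path setting for the current local date.
base="hdfs://hadoop100:8020/flume/logs"
today_dir="$base/$(date +%y-%m-%d)/"
echo "$today_dir"
```

The printed value is the directory to look in when checking for output after a test run.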

- Start Flume

- Preparation

Create /tmp/flume-logs and grant permissions

    sudo mkdir -p /tmp/flume-logs
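The spooling source renames each file after ingesting it, so the user running the agent needs write access to this directory. The original shows no command for the permission step; one possible approach, sketched here with a hypothetical helper name `prepare_spool_dir`, is to create the directory as the agent's own user so that no ownership change is needed:

```shell
#!/usr/bin/env bash
# Hypothetical helper: create a spool directory owned by the current user
# and confirm that this user can write to it.
prepare_spool_dir() {
  local dir="$1"
  mkdir -p "$dir" || return 1
  # Fails if the directory already exists and belongs to another user (e.g. root).
  chmod u+rwx "$dir" || return 1
  [ -w "$dir" ]
}

# Usage (run as the user that will start the agent):
#   prepare_spool_dir /tmp/flume-logs && echo "spool dir ready"
```

If the directory was already created with `sudo`, it belongs to root, and its ownership or mode has to be adjusted with `sudo` as well.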

- Start

Run the following command to start the agent:

    flume-ng agent --conf ./conf/ -f ./conf/log2hdfs.conf --name a1 -Dflume.root.logger=INFO,console

- Test

    # Each run appends one line of text
    echo xxxxxxxxxxxx >> /tmp/flume-logs/access_log123.log

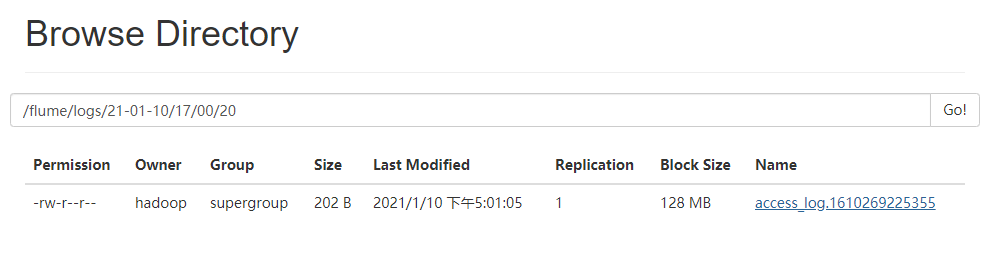
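The spooling directory source expects each file to be complete and uniquely named when it lands in the directory. Writing to a file after it has been placed there, or reusing a file name, makes the source log an error and stop processing. For repeated tests, a safer pattern is to write the file elsewhere and move it in under a fresh name. A sketch, with a hypothetical helper name `drop_into_spool`:

```shell
#!/usr/bin/env bash
# Hypothetical helper: stage a file outside the spool directory, then move it in
# under a name that is unlikely to repeat (timestamp plus mktemp's random suffix).
drop_into_spool() {
  local spool_dir="$1" text="$2"
  local tmp name
  tmp=$(mktemp "${TMPDIR:-/tmp}/access_log.XXXXXX") || return 1
  printf '%s\n' "$text" > "$tmp"
  name="$(date +%Y%m%d%H%M%S)-$(basename "$tmp").log"
  # Move only after writing has finished, so the source never sees a partial file.
  mv "$tmp" "$spool_dir/$name" || return 1
  echo "$spool_dir/$name"
}

# Usage:
#   drop_into_spool /tmp/flume-logs "xxxxxxxxxxxx"
```

Each call produces a new file, so earlier files that the source has already renamed are never touched again.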

Open the Hadoop web console at http://hadoop100:9870/ and check whether data has been generated under /flume/logs/:
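The same check can be done from the command line with the HDFS shell. A minimal sketch, assuming the `hdfs` command from the Hadoop installation is on the PATH; the wrapper name `list_flume_output` is hypothetical:

```shell
#!/usr/bin/env bash
# Hypothetical wrapper: recursively list what the sink has written so far.
list_flume_output() {
  local path="${1:-/flume/logs}"
  if ! command -v hdfs >/dev/null 2>&1; then
    echo "hdfs command not found on PATH" >&2
    return 1
  fi
  hdfs dfs -ls -R "$path"
}

# Usage:
#   list_flume_output /flume/logs
```

Files that the sink is still writing normally carry a `.tmp` suffix until they are rolled.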

Exception: log collection fails with the following error:

    2021-03-09 22:38:50,834 (SinkRunner-PollingRunner-DefaultSinkProcessor) [ERROR - org.apache.flume.sink.hdfs.HDFSEventSink.process(HDFSEventSink.java:459)] process failed
    java.lang.NoSuchMethodError: com.google.common.base.Preconditions.checkArgument(ZLjava/lang/String;Ljava/lang/Object;)V

Cause: flume/lib/guava-xxx.jar conflicts with the guava jar bundled with Hadoop.

Fix: delete or rename the guava jar under flume/lib so that only Hadoop's version is used.
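The rename can be scripted so that it does not depend on the exact guava version. A sketch under that assumption, with a hypothetical helper name `disable_flume_guava`; it adds a `.bak` suffix so the file no longer matches `*.jar` on the classpath but can still be restored:

```shell
#!/usr/bin/env bash
# Hypothetical helper: rename every guava jar in a lib directory to *.jar.bak.
disable_flume_guava() {
  local lib_dir="$1" jar
  for jar in "$lib_dir"/guava-*.jar; do
    [ -e "$jar" ] || continue   # the glob matched nothing
    mv "$jar" "$jar.bak"
    echo "renamed $jar"
  done
}

# Usage:
#   disable_flume_guava /opt/pkg/flume/lib
```

Restart the agent afterwards so the change to the classpath takes effect.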
