If you are running Hadoop 3.1.x, pair it with HBase 2, for example HBase 2.2.3.

Hadoop version support matrix

- √ = Tested to be fully-functional

- ! = Known to not be fully-functional, or there are CVEs so we drop the support in newer minor releases

- x = Not tested, may/may-not function

| | HBase-1.4.x | HBase-1.6.x | HBase-1.7.x | HBase-2.2.x | HBase-2.3.x |
|---|---|---|---|---|---|
| Hadoop-2.7.0 | x | x | x | x | x |
| Hadoop-2.7.1+ | √ | x | x | x | x |
| Hadoop-2.8.[0-2] | x | x | x | x | x |
| Hadoop-2.8.[3-4] | ! | x | x | x | x |
| Hadoop-2.8.5+ | ! | √ | x | √ | x |
| Hadoop-2.9.[0-1] | x | x | x | x | x |
| Hadoop-2.9.2+ | ! | √ | x | √ | x |
| Hadoop-2.10.x | ! | √ | √ | ! | √ |
| Hadoop-3.1.0 | x | x | x | x | x |
| Hadoop-3.1.1+ | x | x | x | √ | √ |
| Hadoop-3.2.x | x | x | x | √ | √ |

1. Extract the archive

```shell
[hadoop@hadoop100 hbase-2.2.3]$ tar -zxvf hbase-2.2.3-bin.tar.gz -C /opt/pkg/
```
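Before extracting, it can help to confirm which top-level directory the archive will create under the target path. The sketch below is one way to do that; `archive_top_dir` is a hypothetical helper name, and it assumes the archive was packed with a single top-level directory.

```shell
# Hypothetical helper: print the first path component of the first archive entry.
# Assumes a gzip-compressed tar archive with one top-level directory.
archive_top_dir() {
  # -t lists entries without extracting; awk keeps only the text before the first "/"
  tar -tzf "$1" | awk -F/ 'NR == 1 { print $1 }'
}

# Usage (archive name taken from the step above):
#   archive_top_dir hbase-2.2.3-bin.tar.gz
```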

2. Environment variables

```shell
[hadoop@hadoop100 hbase-2.2.3]$ vi conf/hbase-env.sh
[hadoop@hadoop100 hbase-2.2.3]$ sudo vim /etc/profile.d/env.sh

# JAVA_HOME
export JAVA_HOME=/opt/pkg/java
export PATH=$JAVA_HOME/bin:$PATH

# HADOOP_HOME
export HADOOP_HOME=/opt/pkg/hadoop-3.1.4
export PATH=$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$PATH

# HBASE_HOME
export HBASE_HOME=/opt/pkg/hbase-2.2.3
export PATH=$HBASE_HOME/bin:$PATH
```

Finally, run `source` so that the settings take effect in the current shell:

```shell
[hadoop@hadoop100 hbase-2.2.3]$ source /etc/profile.d/env.sh
```
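To check that the variables were actually picked up, something like the following sketch can be run in the same shell. `check_home` is a hypothetical helper, not part of Hadoop or HBase; it only reports whether each variable is set and points at an existing directory.

```shell
# Hypothetical helper: report whether the named variable is set
# and refers to an existing directory.
check_home() {
  name="$1"
  # Look up the value of the variable whose name is stored in $name
  eval "value=\${$name:-}"
  if [ -z "$value" ]; then
    echo "$name is not set"
    return 1
  elif [ ! -d "$value" ]; then
    echo "$name=$value is not a directory"
    return 1
  fi
  echo "$name=$value"
}

# Check the three variables defined in env.sh; keep going if one is missing
for var in JAVA_HOME HADOOP_HOME HBASE_HOME; do
  check_home "$var" || true
done
```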

3. Configuration

Create symbolic links to Hadoop's hdfs-site.xml and core-site.xml in HBase's conf directory:

```shell
# hdfs-site.xml
$ ln -s /opt/pkg/hadoop-3.1.4/etc/hadoop/hdfs-site.xml /opt/pkg/hbase-2.2.3/conf/hdfs-site.xml

# core-site.xml
$ ln -s /opt/pkg/hadoop-3.1.4/etc/hadoop/core-site.xml /opt/pkg/hbase-2.2.3/conf/core-site.xml
```
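The same linking step can be written as a small loop that uses the `HADOOP_HOME` and `HBASE_HOME` variables from step 2 instead of hard-coded paths. This is only a sketch; `link_hadoop_conf` is a hypothetical helper name.

```shell
# Hypothetical helper: link Hadoop's core-site.xml and hdfs-site.xml
# into an HBase conf directory.
link_hadoop_conf() {
  hadoop_conf="$1"   # e.g. $HADOOP_HOME/etc/hadoop
  hbase_conf="$2"    # e.g. $HBASE_HOME/conf
  for f in core-site.xml hdfs-site.xml; do
    if [ ! -f "$hadoop_conf/$f" ]; then
      echo "missing $hadoop_conf/$f" >&2
      return 1
    fi
    # -s creates a symbolic link; -f replaces a link left over from an earlier run
    ln -sf "$hadoop_conf/$f" "$hbase_conf/$f"
  done
}

# Usage:
#   link_hadoop_conf "$HADOOP_HOME/etc/hadoop" "$HBASE_HOME/conf"
```

Because of `-f`, the function can be re-run safely after changing the Hadoop installation path.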

Edit HBase's conf/hbase-env.sh:

```shell
# The java implementation to use. Java 1.7+ required.
# export JAVA_HOME=/usr/java/jdk1.6.0/
export JAVA_HOME=/opt/pkg/java

# Configure PermSize. Only needed in JDK7. You can safely remove it for JDK8+
# export HBASE_MASTER_OPTS="$HBASE_MASTER_OPTS -XX:PermSize=128m -XX:MaxPermSize=128m"
# export HBASE_REGIONSERVER_OPTS="$HBASE_REGIONSERVER_OPTS -XX:PermSize=128m -XX:MaxPermSize=128m"

# Tell HBase whether it should manage it's own instance of Zookeeper or not.
# export HBASE_MANAGES_ZK=true
```

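- First, set JAVA_HOME to the actual path of your JDK.

- The two export statements under "Configure PermSize" are tuning options for JDK 7 only. If you are not using JDK 7, delete them or leave them commented out.

- By default, HBase manages its own bundled ZooKeeper service.

  - To use an external ZooKeeper cluster instead, set `export HBASE_MANAGES_ZK=false`.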
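If you do switch to an external ZooKeeper cluster, the setting can also be applied without opening an editor. The following is a sketch under the assumption that hbase-env.sh contains at most one `export HBASE_MANAGES_ZK=` line (commented or not); `set_manages_zk` is a hypothetical helper name.

```shell
# Hypothetical helper: set HBASE_MANAGES_ZK in an hbase-env.sh file.
set_manages_zk() {
  env_file="$1"   # e.g. $HBASE_HOME/conf/hbase-env.sh
  value="$2"      # true or false
  if grep -q '^[# ]*export HBASE_MANAGES_ZK=' "$env_file"; then
    # Rewrite the existing line, uncommenting it if needed; keep a .bak copy
    sed -i.bak "s|^[# ]*export HBASE_MANAGES_ZK=.*|export HBASE_MANAGES_ZK=$value|" "$env_file"
  else
    # No such line yet: append one
    echo "export HBASE_MANAGES_ZK=$value" >> "$env_file"
  fi
}

# Usage:
#   set_manages_zk "$HBASE_HOME/conf/hbase-env.sh" false
```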

Edit HBase's main configuration file, conf/hbase-site.xml:

```xml
<configuration>
  <!-- Root directory of HBase on HDFS -->
  <property>
    <name>hbase.rootdir</name>
    <value>hdfs://hadoop100:8020/hbase</value>
  </property>
  <!-- Directory where ZooKeeper stores its snapshot data -->
  <property>
    <name>hbase.zookeeper.property.dataDir</name>
    <value>/opt/pkg/hadoop/data/tmp/hbase-zkdata</value>
  </property>
  <!-- Whether to run in distributed (cluster) mode -->
  <property>
    <name>hbase.cluster.distributed</name>
    <value>true</value>
  </property>
  <!-- All hosts of the ZooKeeper cluster, comma-separated if more than one -->
  <property>
    <name>hbase.zookeeper.quorum</name>
    <value>hadoop100</value>
  </property>
</configuration>
```

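- hbase.rootdir is the root directory of HBase's data on HDFS; the data of every region is stored under it.

- hbase.cluster.distributed must be set to true for both pseudo-distributed and fully distributed deployments.

  - If it is set to false, HBase and ZooKeeper run inside a single JVM process.

- hbase.zookeeper.quorum defaults to localhost. Change it to the hostnames of all machines in the ZooKeeper cluster, separated by commas.

- ZooKeeper listens on port 2181 by default; if yours uses a different port, add the corresponding setting.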
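To confirm which values a given hbase-site.xml actually sets, a small lookup like the one below can be handy. It is a rough sketch, not a real XML parser: `get_hbase_prop` is a hypothetical helper, and it assumes each `<name>` and `<value>` tag sits on its own line with the value following its name.

```shell
# Hypothetical helper: print the value of one property from an hbase-site.xml.
# Assumes one <name>/<value> tag per line, value after name.
get_hbase_prop() {
  site_file="$1"   # e.g. $HBASE_HOME/conf/hbase-site.xml
  prop="$2"        # e.g. hbase.zookeeper.quorum
  awk -v want="$prop" '
    /<name>/  { n = $0; sub(/.*<name>/, "", n); sub(/<\/name>.*/, "", n); name = n }
    /<value>/ { v = $0; sub(/.*<value>/, "", v); sub(/<\/value>.*/, "", v)
                if (name == want) { print v; exit } }
  ' "$site_file"
}

# Usage:
#   get_hbase_prop "$HBASE_HOME/conf/hbase-site.xml" hbase.cluster.distributed
#   get_hbase_prop "$HBASE_HOME/conf/hbase-site.xml" hbase.zookeeper.quorum
```

An empty result means the property is not set in that file, so HBase falls back to its default.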

Problem:

java.lang.IllegalStateException: The procedure WAL relies on the ability to hsync for proper operation during component failures, but the underlying filesystem does not support doing so. Please check the config value of 'hbase.procedure.store.wal.use.hsync' to set the desired level of robustness and ensure the config value of 'hbase.wal.dir' points to a FileSystem mount that can provide it.

Solution:

- Add the following property to hbase-site.xml:

```xml
<property>
  <name>hbase.unsafe.stream.capability.enforce</name>
  <value>false</value>
</property>
```

4. Testing

First, start Hadoop's HDFS daemons:

```shell
[hadoop@hadoop100 lib]$ start-dfs.sh
```

Wait about half a minute, then start HBase:

```shell
[hadoop@hadoop100 lib]$ start-hbase.sh
hadoop100: starting zookeeper, logging to /opt/pkg/hbase-2.2.3/logs/hbase-hadoop-zookeeper-hadoop100.out
starting master, logging to /opt/pkg/hbase-2.2.3/logs/hbase-hadoop-master-hadoop100.out
starting regionserver, logging to /opt/pkg/hbase-2.2.3/logs/hbase-hadoop-1-regionserver-hadoop100.out
```
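After startup, the JDK's `jps` tool lists the running Java processes, which gives a quick way to see whether the three daemons from the log lines above are alive. The sketch below assumes they appear in `jps` output as HMaster, HRegionServer and HQuorumPeer (the name used by the HBase-managed ZooKeeper); `missing_daemons` is a hypothetical helper.

```shell
# Hypothetical helper: read `jps` output on stdin and print every expected
# HBase process name that does not appear in it.
missing_daemons() {
  jps_out=$(cat)
  for proc in HMaster HRegionServer HQuorumPeer; do
    # -w matches whole words only, -q suppresses output
    if ! printf '%s\n' "$jps_out" | grep -qw "$proc"; then
      echo "$proc"
    fi
  done
}

# Usage (prints nothing when all three are running):
#   jps | missing_daemons
```

If a name is reported as missing, the corresponding `.out` and `.log` files under the logs directory shown above are the first place to look.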

Test with the HBase shell:

```shell
[hadoop@hadoop100 lib]$ hbase shell
HBase Shell; enter 'help<RETURN>' for list of supported commands.
Type "exit<RETURN>" to leave the HBase Shell
Version 2.2.3, r67592f3d062743907f8c5ae00dbbe1ae4f69e5af, Tue Oct 25 18:10:20 CDT 2020

hbase(main):001:0> status
1 active master, 0 backup masters, 1 servers, 0 dead, 2.0000 average load

hbase(main):002:0> list
TABLE
0 row(s) in 0.0490 seconds

=> []
hbase(main):004:0> create 'wc','cf'
0 row(s) in 4.6740 seconds

=> Hbase::Table - wc
hbase(main):005:0> put 'wc','hello', 'cf:word', 'word'
0 row(s) in 0.2030 seconds

hbase(main):006:0> scan 'wc'
ROW                   COLUMN+CELL
 hello                column=cf:word, timestamp=1610209834376, value=word
1 row(s) in 0.0590 seconds

hbase(main):007:0>
```
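The same create/put/scan check can also be run without an interactive session by feeding commands to `hbase shell -n` (non-interactive mode) on standard input. The sketch below is an assumption-laden example: the table name `wc_check` is hypothetical and chosen so that it does not collide with the `wc` table created above, and the commands are only executed if `hbase` is found on PATH.

```shell
# Write the smoke-test commands to a temporary script file.
# 'wc_check' is a hypothetical table name used only for this check.
SMOKE_SCRIPT=$(mktemp)
cat > "$SMOKE_SCRIPT" <<'EOF'
create 'wc_check', 'cf'
put 'wc_check', 'hello', 'cf:word', 'word'
scan 'wc_check'
disable 'wc_check'
drop 'wc_check'
EOF

# Run the script in non-interactive mode when the hbase launcher is available
if command -v hbase >/dev/null 2>&1; then
  hbase shell -n < "$SMOKE_SCRIPT"
else
  echo "hbase not found on PATH; skipping smoke test" >&2
fi
```

The table is disabled and dropped at the end, so the check can be repeated.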

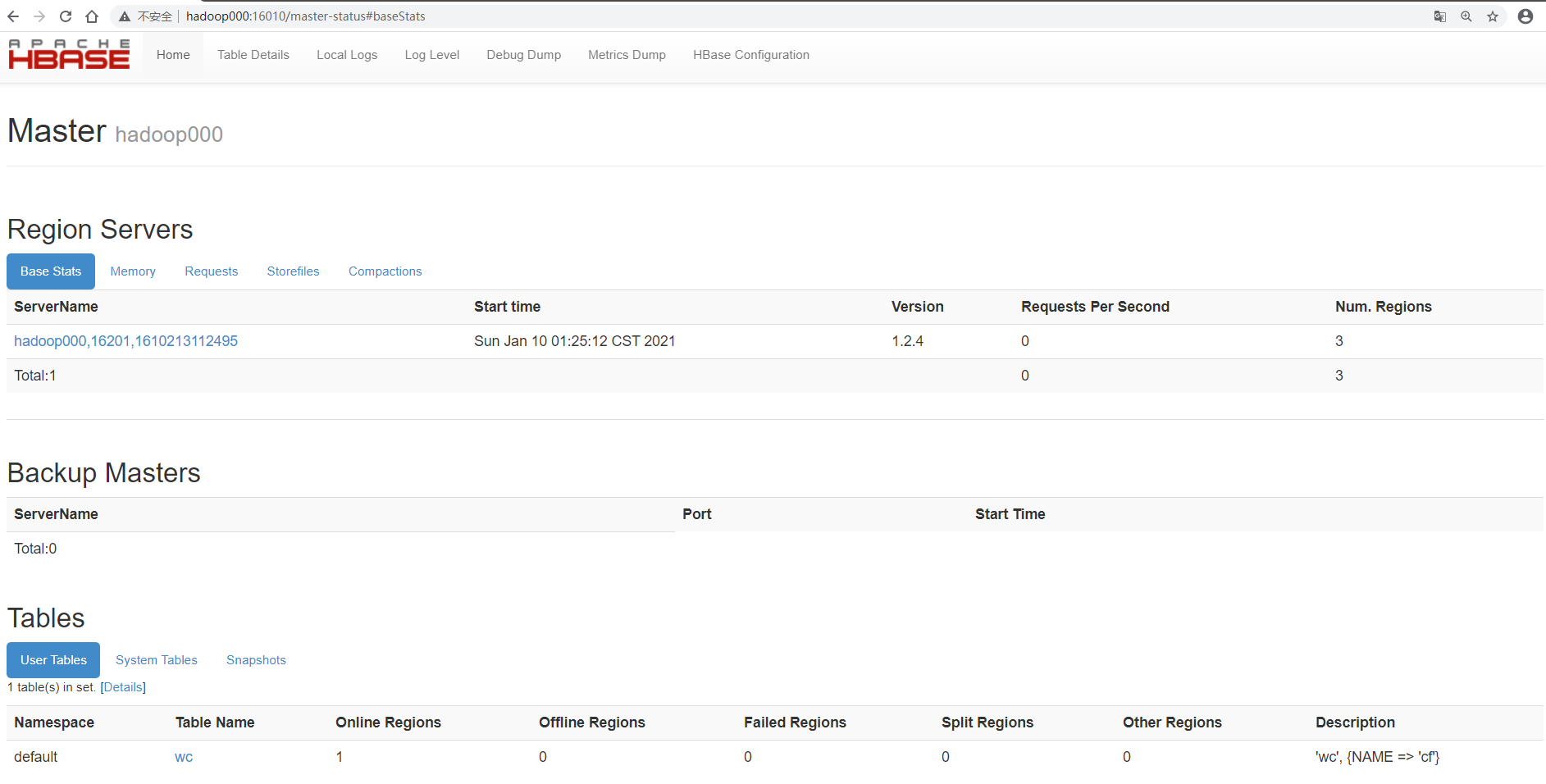

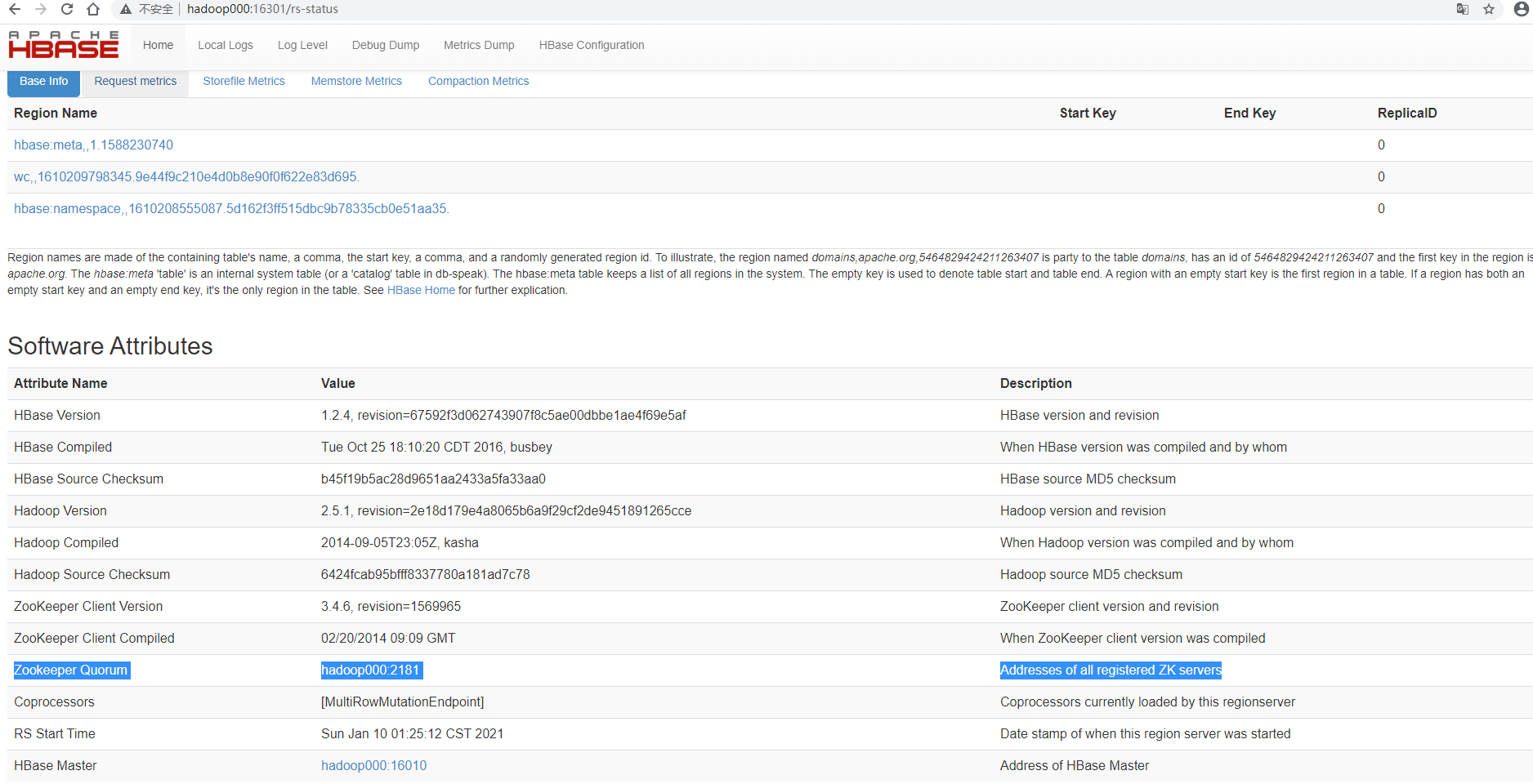

Web UI

HBase Master web UI: http://hadoop100:16010

HBase RegionServer web UI: http://hadoop100:16301

Common problems

Note: you may see the following warning when starting the HBase shell:

```shell
[hadoop@hadoop100 hbase-2.2.3]$ hbase shell
SLF4J: Class path contains multiple SLF4J bindings.
```

The cause is a jar conflict between Hadoop and HBase: both ship an slf4j-log4j12 binding. To fix it, rename the duplicate slf4j-log4j12 jar under hbase/lib:

```shell
[hadoop@hadoop100 lib]$ mv slf4j-log4j12-1.7.5.jar slf4j-log4j12-1.7.5.jar.backup
```
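The exact jar version on your machine may differ from the one in the command above, so it is worth listing the bindings first. A minimal sketch, with `list_slf4j_bindings` as a hypothetical helper and the Hadoop search path as an assumption about where Hadoop keeps its libraries:

```shell
# Hypothetical helper: list every slf4j-log4j12 jar found under the given directories.
list_slf4j_bindings() {
  for dir in "$@"; do
    # Skip directories that do not exist on this machine
    [ -d "$dir" ] || continue
    find "$dir" -name 'slf4j-log4j12-*.jar'
  done
}

# Usage (the share/hadoop location is an assumption; adjust to your layout):
#   list_slf4j_bindings "$HBASE_HOME/lib" "$HADOOP_HOME/share/hadoop"
```

Only the copy under HBase's lib directory needs to be renamed; the Hadoop copy stays in place.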
